
How to implement the derivative of Softmax independently from any loss function

The main job of the Softmax function is to turn a vector of real numbers into a probability distribution: every output lies between 0 and 1, and the outputs sum to 1.
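For reference, here is a minimal NumPy sketch of Softmax itself. It assumes a 1-D input vector, and the function name `softmax` is our own choice. Subtracting the maximum before exponentiating is a common stability trick. It does not change the result, because Softmax gives the same output if the same constant is added to every input.

```python
import numpy as np

def softmax(x):
    """Map a 1-D vector of real numbers to probabilities that sum to 1."""
    x = np.asarray(x, dtype=float)
    # Shift by the max so np.exp never overflows; the output is unchanged.
    exps = np.exp(x - np.max(x))
    return exps / np.sum(exps)

s = softmax([0.0, 1.0])
print(s)        # roughly [0.269 0.731]
print(s.sum())  # approximately 1.0
```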

The Softmax function takes a vector as input and returns a vector as output. Therefore, its derivative is not a single number but a Jacobian matrix: the matrix of all first-order partial derivatives of each output with respect to each input.
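To make the Jacobian concrete before doing any calculus, the sketch below approximates it numerically with central finite differences. Column i records how every output changes when only input i is nudged. The helper `numerical_jacobian` and the step size `eps=1e-6` are illustrative assumptions, not part of the original article. `softmax` is redefined so the snippet runs on its own.

```python
import numpy as np

def softmax(x):
    x = np.asarray(x, dtype=float)
    exps = np.exp(x - np.max(x))
    return exps / np.sum(exps)

def numerical_jacobian(f, x, eps=1e-6):
    """Approximate J[j, i] = d f_j / d x_i with central differences (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    n_out = f(x).size
    jac = np.zeros((n_out, x.size))
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = eps
        # Column i: sensitivity of every output to a small change in input i.
        jac[:, i] = (f(x + step) - f(x - step)) / (2 * eps)
    return jac

J = numerical_jacobian(softmax, [0.0, 1.0, 2.0])
print(J.shape)  # (3, 3): one row per output, one column per input
```

Because the Softmax outputs always sum to 1, each column of this matrix sums to approximately 0. This gives a quick sanity check for any analytic implementation.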

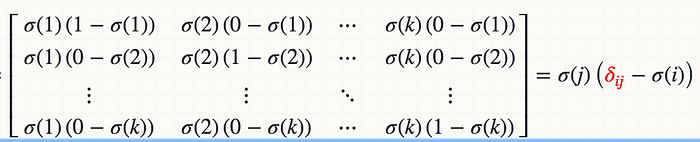

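In mathematical notation, the derivative of the Softmax output σ_j with respect to the logit z_i (for example, z_i = W_i · X) is:

$$\frac{\partial \sigma_j}{\partial z_i} = \sigma_j\,(\delta_{ij} - \sigma_i)$$

where δ_ij is the Kronecker delta: 1 if i = j and 0 otherwise.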
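Collecting the partial derivatives for every pair (i, j) gives the full Jacobian. Its diagonal entries are σ_i(1 − σ_i) and every off-diagonal entry is −σ_iσ_j. Treating σ as a column vector of Softmax outputs, the matrix can be written compactly as follows. It is symmetric, so the row/column convention does not matter here.

$$
J = \frac{\partial \sigma}{\partial z} =
\begin{bmatrix}
\sigma_1(1-\sigma_1) & -\sigma_1\sigma_2 & \cdots & -\sigma_1\sigma_n \\
-\sigma_2\sigma_1 & \sigma_2(1-\sigma_2) & \cdots & -\sigma_2\sigma_n \\
\vdots & \vdots & \ddots & \vdots \\
-\sigma_n\sigma_1 & -\sigma_n\sigma_2 & \cdots & \sigma_n(1-\sigma_n)
\end{bmatrix}
= \operatorname{diag}(\sigma) - \sigma\sigma^{\top}
$$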

How can we put this into code?

Implemented iteratively in Python, it looks like this:

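```python
import numpy as np

def softmax_grad(s):
    # Take the derivative of each softmax output w.r.t. each logit, which is usually Wi * X.
    # Input s is the softmax output of the original input x, as a 1-D array.
    # s.shape = (n,)
    # e.g. s = np.array([0.3, 0.7]), roughly the softmax of x = np.array([0, 1])

    # Use floats so the assignments below are not truncated to integers.
    s = np.asarray(s, dtype=float)

    # Initialize the 2-D (n x n) Jacobian matrix.
    jacobian_m = np.diag(s)

    for i in range(len(jacobian_m)):
        for j in range(len(jacobian_m)):
            if i == j:
                # Diagonal: d s_i / d z_i = s_i * (1 - s_i)
                jacobian_m[i, j] = s[i] * (1 - s[i])
            else:
                # Off-diagonal: d s_i / d z_j = -s_i * s_j
                jacobian_m[i, j] = -s[i] * s[j]

    return jacobian_m
```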
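The double loop can also be replaced by a single vectorized expression, because the Jacobian equals diag(s) minus the outer product of s with itself. The sketch below assumes a 1-D `s`, and the name `softmax_grad_vectorized` is our own. Because the Jacobian does not depend on any loss, backpropagating through Softmax reduces to a matrix-vector product with whatever upstream gradient dL/ds the chosen loss provides. The `upstream` vector here is a made-up example value.

```python
import numpy as np

def softmax_grad_vectorized(s):
    """Jacobian of softmax given its output s (1-D, shape (n,)).

    J[i, j] = s[i] * (delta_ij - s[j]), i.e. diag(s) - outer(s, s).
    """
    s = np.asarray(s, dtype=float).ravel()
    return np.diag(s) - np.outer(s, s)

s = np.array([0.3, 0.7])
J = softmax_grad_vectorized(s)
print(J)  # approximately [[0.21, -0.21], [-0.21, 0.21]]

# Chain rule for any loss L: dL/dz = J^T @ dL/ds (J is symmetric, so J @ dL/ds also works).
upstream = np.array([1.0, -2.0])  # hypothetical dL/ds from some loss
grad_z = J.T @ upstream
print(grad_z)  # approximately [0.63, -0.63]
```

Keeping the Jacobian separate from the loss means the same function works with cross-entropy, squared error, or any other loss. Only the `upstream` vector changes.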